International. The European Commission is funding the EMERALD Project "Process Automation and AI for Entertainment and Sustainable Media", under the Horizon Europe Programme.

The interdisciplinary consortium of seven partners includes leading companies in the film, broadcast, streaming and live entertainment technology sectors (British Broadcasting Corporation, Brainstorm Multimedia SL, Disguise Systems Ltd, FilmLight GmbH and MOG Technologies SA), supported by two major European universities (Pompeu Fabra University and Trinity College Dublin).

"EMERALD strives to pioneer innovative tools for the digital entertainment and media sectors, harnessing the potential of artificial intelligence, machine learning and big data technologies. The overall goal is to revolutionize processing, improve production efficiency, minimize energy consumption, and raise content quality through cutting-edge innovations," says Francisco Ibáñez, R+D project manager at Brainstorm. "Currently, there is a massive increase in the volume of video and extended reality-based content, with an unsustainable demand for skilled human resources, data processing, and energy. This project aims to address this challenge by developing process automation for the creation of sustainable media."

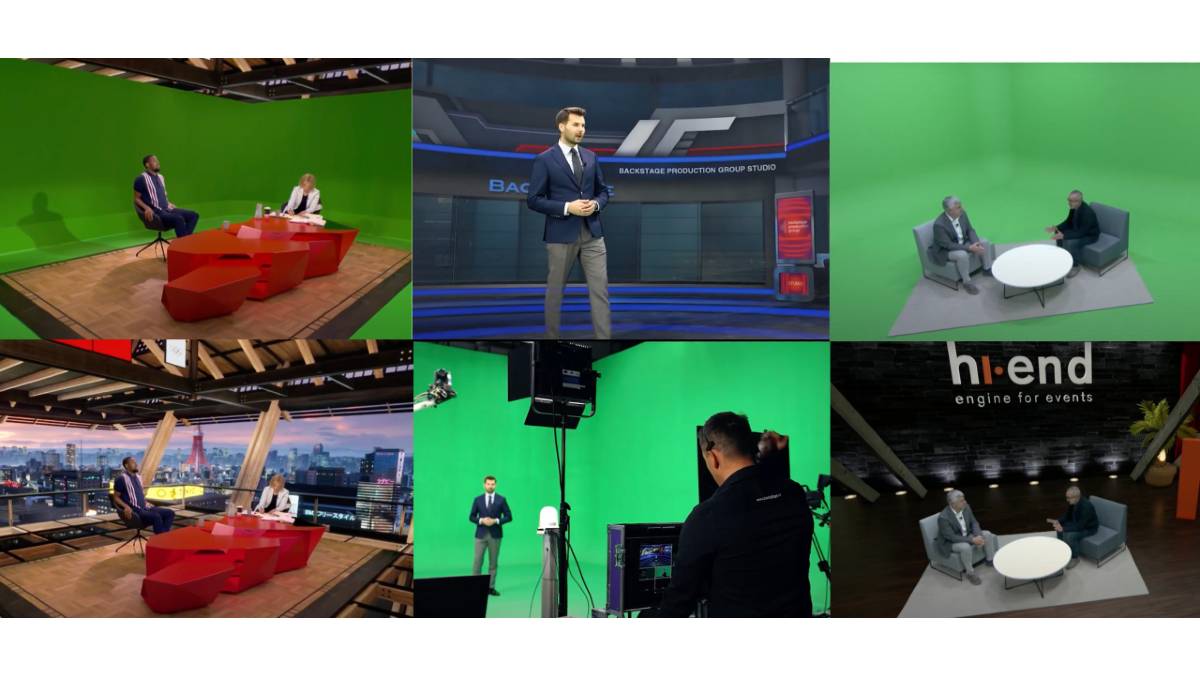

EMERALD aims to apply machine learning to automate some of the most labor-intensive tasks in video content production, which has considerable implications for both time and energy use. Javier Montesa, technical coordinator of R+D at Brainstorm, says that Brainstorm, in collaboration with Pompeu Fabra University (UPF), will develop methods and tools based on deep learning for video matting. "The main goal is to produce high-quality results for real-time automatic integration of remote presenters or performers into virtual scenes and sets for broadcast and streaming media using deep learning without the need for a 'trimap.' With Brainstorm's InfinitySet AI enhancement, we'll bring the quality of green screen methods to simpler settings."
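For readers unfamiliar with matting: a matte assigns each pixel an opacity value (alpha) that says how much of it belongs to the presenter, and a trimap is a rough guide image marking known foreground, known background and an uncertain band in between. The sketch below illustrates only the standard compositing step that uses such a matte, not the deep learning model that estimates it and not Brainstorm's or UPF's implementation. The function names and the nested-list frame layout are assumptions made for illustration.

```python
def composite_pixel(fg, bg, alpha):
    """Blend one RGB presenter pixel over a virtual-scene pixel.

    alpha is the matte value in [0, 1]: 1.0 keeps the presenter,
    0.0 keeps the virtual scene, values in between mix the two.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


def composite_frame(fg_frame, bg_frame, matte):
    """Composite a frame stored as rows of RGB tuples (hypothetical layout)."""
    return [
        [composite_pixel(f, b, a) for f, b, a in zip(fg_row, bg_row, a_row)]
        for fg_row, bg_row, a_row in zip(fg_frame, bg_frame, matte)
    ]
```

In this picture, the quality of the final image depends almost entirely on how accurately the matte is estimated, which is the part the project intends to automate.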

Javier explains that Brainstorm has plans to integrate InfinitySet with UPF's deep learning systems for head and body pose estimation that will allow the operator to trigger content that will be automatically displayed on different parts of the scene, virtual screens, 3D graphics placeholders, or simply in front of the presenter as they move. Francisco adds that "new ways will be explored to improve the insertion of the presenter, change their silhouette and calculate their shadow with more precision and realism."

In addition, Brainstorm will be involved in the creation of tools designed for automatic color balancing and shot matching. Color manipulations are necessary in post-production, in virtual productions, and in virtual broadcast studios. Javier states that automating the presenter's color grading to match the virtual scene will be especially valuable for virtual broadcast studios that don't have colorists. "Integrating this automatic color correction into InfinitySet will simplify the use of the tool and improve presenter-scene integration when lighting conditions are uncontrolled or when virtual scene lighting conditions are destined to vary during a program."
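As a rough illustration of what matching a presenter's color to a scene can involve, the sketch below uses a simple statistical color transfer: each color channel of the presenter image is shifted and scaled so that its mean and spread match those of the scene. This is a classic baseline chosen for illustration, not the method EMERALD will develop, and the function name and the flat list-of-values input are assumptions.

```python
from statistics import mean, pstdev


def match_channel(source, reference):
    """Shift and scale one color channel to match a reference channel.

    Both inputs are flat lists of values in [0, 1] (hypothetical layout).
    The result has approximately the reference's mean and spread,
    clamped to the valid range.
    """
    src_mean, ref_mean = mean(source), mean(reference)
    src_std, ref_std = pstdev(source), pstdev(reference)
    # A flat source channel has no spread to rescale, so only shift it.
    scale = ref_std / src_std if src_std > 0 else 1.0
    return [
        min(1.0, max(0.0, (v - src_mean) * scale + ref_mean))
        for v in source
    ]
```

Applying the function to the red, green and blue channels in turn gives a first approximation of shot matching; a colorist, or a learned model, would go well beyond this.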

The project's progress and results can be followed at https://www.upf.edu/web/emerald
